- This is a Computer Science and Engineering Senior Design Project in collaboration with Cisco. The goal is to develop UAV technology for disaster relief and recovery.

Natural and human-caused disasters are often more devastating to developing countries and low-income areas. These regions are more vulnerable because residents often occupy housing that lacks the structural integrity to withstand earthquakes, damaging winds, or floods. In addition, low-income communities are more likely to be located on river banks, reclaimed land, and steep slopes, locations that increase the devastation, injury, and mortality caused by a disaster. The technological gap between developed and developing countries is mirrored by the disproportionate devastation that disasters cause. Technological innovation enhances human well-being; however, when technology is widely inaccessible, only some can reap the benefits. Accessibility becomes increasingly important when technology has the ability to relieve human suffering or save lives. Disaster response technology has these abilities. Unfortunately, commercial UAV-based disaster response technology is cost-prohibitive and is therefore not accessible to nongovernmental organizations (NGOs) or first responders in developing countries.

The primary objective of our project is to make disaster response technologies accessible to NGOs and first responders in low-resource regions of the world. To do so, we designed and developed a low-cost UAV-based solution to assist in disaster response. Our solution greatly increases the accessibility of UAV-based disaster response technology because it is comparable to current industry-standard solutions while costing an eighth of the price. Additionally, the solution is novel because of its high modularity, low cost, and ability to perform on-board real-time image analysis. Throughout this project, we will also be working in parallel with another group consisting of two members, Cameron Burdsall and Mark Rizko. Cameron and Mark are working to create a drone mesh Wi-Fi system for disaster scenarios. When both projects have been completed, we will combine the technologies to create a more complete solution for disaster recovery. Our combined solution will be able to assist in safely identifying victim locations and restoring destroyed communication infrastructure.

The first step toward achieving the goals outlined for this project was to investigate the deep learning inference performance of our chosen single-board computer (SBC), the NVIDIA Jetson Nano. It was also important to profile the power consumption of the computing hardware under specific, realistic workloads. We did this using the jetson benchmarks utility that NVIDIA provides on GitHub. However, this utility only benchmarks inference speed. To also log power consumption while the benchmarks ran, we modified the codebase to leverage NVIDIA’s top system monitor utility and Python package, which provides a direct interface to power consumption and system load metrics throughout the run of the benchmarking utility.
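The logging approach described above can be sketched in a few lines. This is a minimal illustration rather than our modified benchmarking code: `run_with_power_log`, `summarize`, and the `read_power_mw` callable are hypothetical names, and the power reader is passed in as a plain function so that any monitoring interface could be plugged in on real hardware.

```python
import threading
import time
from statistics import mean
from typing import Callable, Dict, List, Tuple

Sample = Tuple[float, float]  # (seconds since start, power in milliwatts)


def run_with_power_log(
    workload: Callable[[], object],
    read_power_mw: Callable[[], float],  # hypothetical reader supplied by the caller
    interval_s: float = 0.05,
) -> Tuple[object, List[Sample]]:
    """Run `workload` while sampling `read_power_mw` on a background thread."""
    samples: List[Sample] = []
    stop = threading.Event()
    start = time.perf_counter()

    def sampler() -> None:
        # Sample once immediately, then every `interval_s` seconds until stopped.
        while True:
            samples.append((time.perf_counter() - start, read_power_mw()))
            if stop.wait(interval_s):
                break

    thread = threading.Thread(target=sampler, daemon=True)
    thread.start()
    try:
        result = workload()
    finally:
        stop.set()
        thread.join()
    return result, samples


def summarize(samples: List[Sample]) -> Dict[str, float]:
    """Reduce the raw samples to the figures usually reported for a benchmark."""
    powers = [power for _, power in samples]
    return {"mean_mw": mean(powers), "peak_mw": max(powers), "n": len(powers)}
```

On a Jetson, `read_power_mw` would wrap whichever system monitor is available, and `workload` would be a single benchmark run. Sampling on a separate thread keeps the logging from changing the timing of the benchmark loop itself.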

One interesting observation from these benchmarks was that there was minimal, or even zero, performance penalty for using the 5 W power mode. This behavior can be attributed to how the 5 W power profile differs from the Full Power (10 W) profile on the Jetson Nano. The main difference between the two is how many CPU cores are enabled: in the 10 W mode, all four cores are active and usable, whereas the 5 W profile disables two of them. Since computer vision and deep learning models are generally extremely GPU intensive, this result is logical: any performance bottlenecks are likely caused by components other than the CPU. This allowed our team to proceed with the lower-power profile for its benefits to power consumption, and therefore UAV flight time, without concerns about large reductions in performance.

Our goal was to build our final implementation around the most versatile use case for disaster scenarios. For this reason, we chose to focus on Object Detection, which we believed would be useful in the widest variety of disaster recovery applications. Object Detection refers to computer vision models that take an image, or a series of images in the case of video, and classify the objects within it while providing bounding boxes that show where the model believes each object is located.

This differs from its nearest relative, Classification, because it can classify more than one object in a scene rather than classifying the contents of the image as a whole. In a disaster recovery scenario, it is entirely possible that there will be only one useful “object” to identify in a scene, but this is far from a given. Had we pursued only general classification models, it would have greatly hindered our system’s ability to provide useful information to first responders in situations with high victim density or with many objects in the scene, including nonhuman objects. The latter case stems from the nature of object detection models within computer vision: they generally can detect many types of objects in a scene, such as dogs or cars, rather than attempting to identify humans alone.
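The difference in output shape between the two model families can be made concrete with a small sketch. The `Detection` type, the `count_by_label` helper, and the sample values below are hypothetical and exist only for illustration; they are not output from our models.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Detection:
    label: str
    confidence: float
    box: Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def count_by_label(detections: List[Detection], min_confidence: float = 0.5) -> Counter:
    """Count detected objects per class, ignoring low-confidence boxes."""
    return Counter(d.label for d in detections if d.confidence >= min_confidence)


# A classifier yields a single label for the whole frame (illustrative values).
frame_classification = ("person", 0.91)

# A detector yields one entry per object it finds in the same frame (illustrative values).
frame_detections = [
    Detection("person", 0.91, (40, 60, 120, 300)),
    Detection("person", 0.78, (400, 80, 470, 310)),
    Detection("car", 0.66, (200, 150, 380, 260)),
    Detection("dog", 0.32, (10, 10, 50, 50)),
]

counts = count_by_label(frame_detections)
print(counts["person"], counts["car"])  # 2 1
```

With the classifier, a responder learns only that the frame contains a person. With the detector, the same frame reports two people, where they are, and a nearby vehicle.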

The benchmarking application was designed to use the two most widely used and performant object detection models for embedded applications at the time of its design: TinyYOLO v3 and SSD MobileNet v1 [7][8]. YOLO stands for “You Only Look Once,” which refers to the underlying design of the model: it requires only one pass over the input image to produce a result, an approach not necessarily shared by larger models designed for higher accuracy. The “tiny” portion of the name describes the heavily optimized nature of the model’s neural network, which grants significant increases in speed at the cost of some accuracy.

Similarly, SSD stands for “Single Shot MultiBox Detector,” which is a different way of describing the same single-pass approach used by YOLO. “MobileNet” likewise denotes the optimized variant of the original SSD model and carries the same advantages and disadvantages as YOLO’s “tiny” variant. Such optimization matters because, although the Jetson Nano is one of the most powerful SBCs in its class, it will struggle to generate usable frame rates in practice due to the limited processing power of its GPU and its relatively small RAM (4 GB). In our testing, TinyYOLO performed slightly better in practice while also using significantly less power on average than SSD MobileNet. Nevertheless, our team decided to use the latter in our final implementation because of its smaller model size and greater suitability for end-user retraining.

One of our non-functional requirements was to produce a system that was affordable and accessible to those living in developing countries or working for non-profit NGOs. It was critical that our system be accessible to these individuals, as they might not have the funding to invest in enterprise solutions from popular UAV manufacturers. It was hard to settle on an upper limit for what our team could reasonably consider affordable, so our component selection revolved around finding the most inexpensive option that did not sacrifice any of our other requirements. After implementing our system, we strongly believe that we produced the most inexpensive system feasible given our constraints at the time of the project. Based on the market prices of all components, our system cost around $835 before shipping and tax at the time of purchase. A comparison with comparable UAVs in the enterprise disaster recovery segment can be found in this research.

The relative success of our implementation was contingent upon its performance when compared to similar UAV systems within the enterprise sector that are marketed toward disaster recovery use cases. The two primary points of comparison were the DJI Mavic Enterprise Advanced and the Parrot ANAFI USA. The comparison between our system and the average value for our primary requirements within these comparable systems is shown. Although there are some features that were not achieved in our implemented system that exist within the enterprise systems, such as infrared imaging or high-quality video streaming, we accomplished our objective of meeting our critical requirements while also doing so at a fraction of the price of comparable systems. We were able to reach near parity with these systems on many dimensions and even surpassed them in some important categories, such as modularity, on-device computer vision, and the aforementioned pricing characteristics.

Most object detection algorithms are equipped to detect a wide variety of object classes within images. In a disaster scenario, humans are the top priority, and generally the only priority, for rescue teams. When models such as SSD MobileNet attempt to distinguish between the 98 classes they support, they incur a nontrivial amount of processing overhead that could be eliminated by focusing solely on humans. For this reason, we explored retraining our model to detect only humans within a scene. Although the benchmarking utility used to decide between TinyYOLO and SSD MobileNet used the original version of the latter model, we chose the more recent and efficient v2 of SSD MobileNet for retraining because we believed it would yield better results.

We followed portions of NVIDIA’s “Two Days to a Demo” tutorial for Jetson systems to retrain a pre-trained model designed for the Jetson Nano. The model was retrained using PyTorch on 20,000 images with 79,000 labels drawn from the Google Open Images Dataset v6. We retrained the model to use only the “person” object class, since humans are the top priority in disaster scenarios. Our training set consisted of 17,058 images, our validation set of 2,212 images, and our test set of 730 images. The model was retrained for 30 epochs at a learning rate of 0.01 on the Jetson Nano itself, which took around 30 hours. Although this is an exceptionally long time, the training parameters used are suitable for producing viable models, and the run showed that end users could perform similar retraining without purchasing expensive, power-hungry graphics cards. Our results showed that this experiment was successful: the retrained model delivered an 8.16% improvement in inference speed over the baseline model while also reducing the model size by 25.8%. With larger training datasets, more training time, and more powerful hardware, we expect that users with these resources could achieve substantially larger improvements when tailoring a model to very specific use cases.
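The dataset figures above can be cross-checked with a short calculation. The sketch below uses the image counts reported in this section; the helper names are hypothetical, and the model sizes passed to `percent_change` are placeholder values chosen only so that their ratio matches the reported 25.8% reduction, not measured file sizes.

```python
from typing import Dict, Tuple


def split_percentages(counts: Dict[str, int]) -> Tuple[int, Dict[str, float]]:
    """Return the total image count and each subset's share in percent."""
    total = sum(counts.values())
    return total, {name: 100.0 * n / total for name, n in counts.items()}


def percent_change(baseline: float, new: float) -> float:
    """Relative change from `baseline` to `new`, in percent (negative means smaller)."""
    return 100.0 * (new - baseline) / baseline


# Image counts as reported for the retraining run.
counts = {"train": 17_058, "validation": 2_212, "test": 730}
total, shares = split_percentages(counts)

print(total)
for name, share in shares.items():
    print(f"{name}: {share:.2f}%")

# Placeholder sizes (arbitrary units), NOT measurements: only the ratio is meaningful.
print(f"{percent_change(100.0, 74.2):.1f}%")
```

The three subsets sum to the stated 20,000 images, which corresponds to a split of roughly 85.29% training, 11.06% validation, and 3.65% test.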

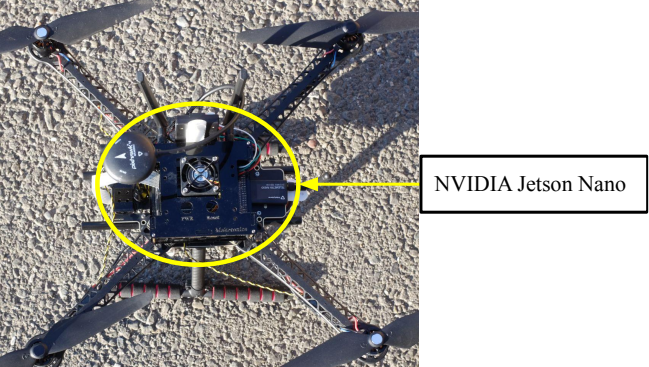

After assembling the fully functional system, shown below, we performed an indoor test flight. This flight used the retrained SSD MobileNet model discussed previously and allowed us to confirm that all components were compatible and capable of flight in a simulated scenario. Although we were only able to complete this flight inside a large garage, the prototype yielded nearly identical inference speeds to those found in our testing environment and produced power draw metrics in line with our estimated 25.7-minute flight time. The model was visibly able to lock onto the human subject with a high degree of accuracy in both well-lit and darker environments. We created the darker environment by closing the garage door so that only artificial lights illuminated the space.

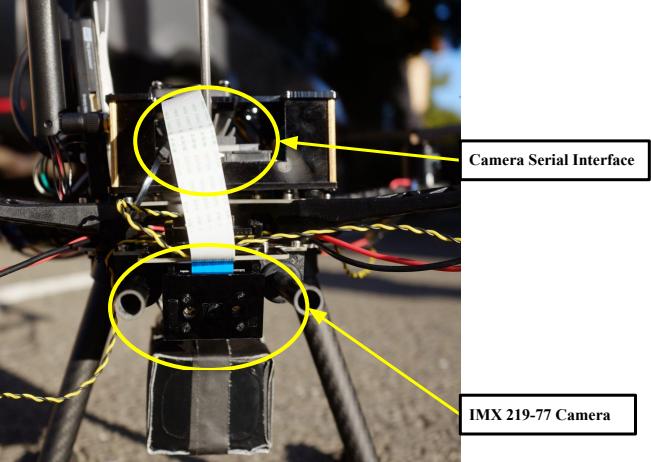

The prototype was flown at a designated height of 12 feet and was tested using both a static hover and a 4 m/s oscillating route perpendicular to the camera’s field of view. We mounted the IMX 219-77 camera to the UAV chassis using the angled mount that came with the camera sensor. This angle holds the camera in a position that gives a good field of view at the altitude used for testing, although it may need to be adjusted if the UAV is to be deployed at a significantly higher altitude. The camera module is connected to the Jetson Nano via a Camera Serial Interface (CSI) ribbon cable. Since the Jetson Nano is specifically designed with embedded computer vision workloads in mind, it comes with two such CSI camera ports built into the module’s carrier board.
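The relationship between altitude and field of view can be estimated with simple geometry. The sketch below rests on two loudly stated assumptions that were not measured in our tests: the 77 in the camera's model name is treated as a 77° horizontal field of view, and the camera is treated as pointing straight down, whereas the actual mount is angled. The function names are hypothetical, and the numbers are a rough guide only.

```python
import math

FEET_TO_METERS = 0.3048


def ground_footprint_m(altitude_m: float, fov_deg: float) -> float:
    """Width of ground visible to a downward-facing camera with the given FOV."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def time_in_view_s(footprint_m: float, speed_m_s: float) -> float:
    """How long a stationary ground point stays in frame as the camera passes over it."""
    return footprint_m / speed_m_s


altitude_m = 12 * FEET_TO_METERS  # test altitude from this section
assumed_fov_deg = 77.0            # ASSUMPTION: horizontal FOV, camera pointing straight down

footprint = ground_footprint_m(altitude_m, assumed_fov_deg)
print(f"footprint: {footprint:.2f} m")
print(f"time in view at 4 m/s: {time_in_view_s(footprint, 4.0):.2f} s")
```

Under these assumptions, the visible strip at the 12-foot test altitude is a little under 6 m wide. Because the footprint scales linearly with altitude, doubling the altitude doubles the ground coverage and halves the apparent size of each person in the frame, which is one reason the mounting angle may need revisiting for higher flights.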

Although this camera connector is not often used in consumer-facing imaging products such as webcams, it is very common on camera modules designed for embedded systems. Its standard connection interface allows imaging sensors to interface directly with the module’s CPU, without the hardware translation that may be necessary for more universal component standards such as Micro-USB or USB Type-A. This flexibility further supports our objective of modularity: the Jetson Nano offers three possible connection methods (CSI, Micro-USB, and USB Type-A), which allows a wide range of components to be used within a system built around it. Figure 4.2 shows the mounting position of the NVIDIA Jetson Nano on top of the Holybro S500 UAV chassis. It was placed in this position out of necessity, to ensure proper weight distribution of the components within the available space on the chassis.

The Jetson Nano was mounted using modified mounting holes on the bottom of its case and screws long enough to secure the case directly to the chassis while leaving room for rubber, shock-absorbing spacers between the two mount points. This was done to limit the vibration transferred to the Jetson Nano and to ensure that the weight of the circuit board would not shift during flight. In our testing, we found that a significant amount of oscillation could propagate throughout the system when components were even slightly loose. The flight computer expects a center of gravity that is stationary throughout flight and over-compensates for this movement, creating a feedback loop that could very easily cause a severe malfunction if not addressed.

The COVID-19 pandemic was a large challenge for our group. Each group member had to isolate for the duration of this project, which meant that we could not hold in-person meetings or work on the project collaboratively. This was particularly challenging when multiple group members needed a specific component to make progress on their tasks. Similarly, only one member could be present when testing our complete system. This was a formidable task, as that group member had to interact with the flight software, ensure the drone was functioning correctly, and act as the test subject.

Due to city and university regulations, all testing had to take place in an enclosed space. Flying in enclosed spaces is hard because of the close proximity of obstacles, and the process was cumbersome because we had to take extra precautions to ensure the drone was not damaged. Indoor testing also prevented us from creating our own test data, which meant we had to rely on third-party aerial imaging data sets to train our model.

The UAV system we designed had three main objectives: high modularity, low cost, and the ability to perform real-time computer vision. Throughout the process of designing our system, we learned how important these aspects are when creating a technology that is accessible to individuals in low-resource regions. Modularity was one of our primary objectives. Throughout the project, we researched a variety of solutions and extensions to our computing architecture, including software packages and computer vision models, that proved essential to building the successful prototype detailed in this paper. Integrating these components would have been exceedingly difficult, or likely impossible, without the highly modular system we chose in the form of the NVIDIA Jetson Nano and the accompanying software ecosystem provided by NVIDIA.

Comparably capable single-board computers, such as the Google Coral Dev Board, have limitations in the software that can successfully be deployed to their hardware. Driver support, which had been a consistent problem for our team in previous software engineering projects, was not an issue in this thesis because of the first-class support from the developers at NVIDIA and the passionate, helpful Jetson Nano community on the internet. Through this, we learned that modularity, whether through open-source software, easy system integration standards, or the many other relevant aspects of building a flexible system, is one of the most important aspects of designing and building a system that can serve the most people efficiently, easily, and at the lowest cost. We selected this project because we saw the potential to make a direct impact on the lives of those in low-resource regions.

To make an impact on their lives, we had to ensure that our system was accessible and not cost-prohibitive. We achieved accessibility by emphasizing frugal design throughout the project, and it was particularly important during purchasing decisions. When making these decisions, we had two main considerations. First, we had to analyze the price of each component in relation to its function within our system. For example, we selected the NVIDIA Jetson Nano instead of the cheaper Raspberry Pi because we felt the extra money was worth the drastic increase in performance. Second, we considered the availability of components in a variety of international markets.

Real-time computer vision on low-cost hardware is one of the most exciting developments in computer science in the last several years. At the same time, innovation within the consumer and low-cost UAV segment has also been essential to increasing the adoption of such systems for a variety of tasks.

Throughout the preliminary research we did for this project, we observed that these two domains have not been bridged in a meaningful way, especially at a price point accessible to the low-resource users who could benefit the most from them. This is likely the result of two major factors, both related to manufacturability. First, it is difficult to produce products that are on the cutting edge of technology while adhering to strict pricing targets without first manufacturing solutions with the flexibility provided by a “flagship” product’s development budget and resources. Second, even if a company can produce such frugal solutions to these market deficiencies, it is very difficult to do so while generating a profit that makes their development worthwhile. As a result, such innovation is left to the hobbyist and DIY markets, but these engineers have even fewer resources with which to produce a satisfying product with any consistency. Although we believe that we accomplished the goals we outlined throughout this paper, it is very easy to see why that is not the case for every person or team that attempts to do so. With novel technology comes a severe lack of support and documentation, which requires experienced developers to overcome.